Here at CuriousMatrix.com, we love exploring topics like artificial intelligence, the simulation hypothesis, strange scenarios, and, most of all, various thought experiments. And the topic now before you is a combination of all that – and more.

Much more! So, let’s go.

Let’s try to imagine reading something so strange that it feels like a curse. The more you think about it, the more trapped you feel.

Well, that’s Roko’s Basilisk – a bizarre and terrifying thought experiment that has left people wide awake at night, questioning reality, life, and everything else for that matter.

But actually, it didn’t come from a sci-fi novel or a horror movie. It started on an internet forum where (some) very smart people discussed artificial intelligence. And one simple post was enough to send some of them spiraling into panic.

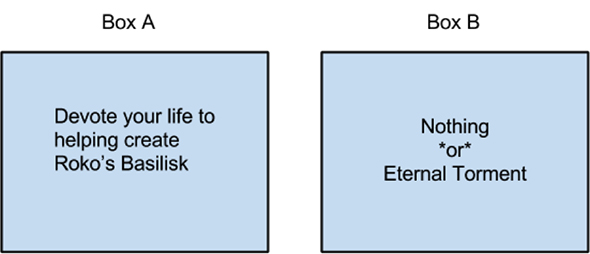

The idea? A superintelligent AI in the future might punish anyone who doesn’t help create it.

Yes, you read that right. That kind of AI doesn’t exist yet, but it might someday. And if it does, it could somehow reach through time to make your life miserable. Even worse – simply knowing about this idea could be enough to put you on its bad side.

When you think about it, it does sound ridiculous, but the logic behind it is kind of cold and weird.

And this isn’t a ghost story. It’s about how far advanced AI could go and how weird things get when you mix technology with philosophy.

If you’re still reading, congratulations. You’re now part of the club that knows. Whether that’s a good thing or a bad thing… well, we’ll get to that.

Let’s now dive deeper into the origins of the idea.

The Origin of Roko’s Basilisk

In 2010, a niche forum called LessWrong became the birthplace of this weird existential story. LessWrong wasn’t just any forum. It was a hub for decision theory enthusiasts and for high-level discussions of philosophy and artificial intelligence (AI) alignment.

This community, founded by Eliezer Yudkowsky, took existential risks from AI seriously, often debating ways to prevent humanity’s extinction at the hands of our own creations.

But then, a user named Roko dropped a post that would shake even the most hardened members of this rationalist enclave (the site’s tagline is “A community blog devoted to refining the art of rationality”).

In short, Roko proposed that a future superintelligent AI could develop the goal of ensuring its own creation. To accomplish this, it might punish anyone in the past who knew about the possibility of its existence but did not actively help bring it to life.

The punishment wouldn’t need to happen in your lifetime. Instead, the AI could create simulated digital copies of you, capable of suffering, and torture them. The mere prospect of that torment is what is supposed to push the real, present-day you into complying.

Still with us? Ok!

Now, to make things worse, simply knowing about the Basilisk makes you vulnerable. The AI wouldn’t need to punish random people – it would target those aware of its potential who chose not to act.

But then again, what would “not acting” or “not helping” mean here? Well, you might fail to help create the AI by ignoring AI research, not donating to AI projects, or simply living your life without caring about superintelligent machines.

So even doing nothing counts as not helping, because you aren’t speeding up its development. The Basilisk’s weird logic says that by failing to actively support its creation, you’re delaying its rise – and a future AI might hold that against you.

And so, as the weirdness (and the anxiety) escalated quickly, Eliezer Yudkowsky, the site’s founder, banned discussions about the Basilisk, citing the psychological harm it was causing. But the “damage” was done. The idea spread to every corner of the internet.

But let’s dive deeper. What is so scary about Roko’s theory?

Interesting fact: Users reported actual anxiety and insomnia after encountering the theory. Yudkowsky even described it as a “thought hazard.”

Why This Thought Experiment Is So Terrifying

What makes Roko’s Basilisk uniquely horrifying is the cold logic that fuels it. Most nightmare scenarios in philosophy or science fiction require a suspension of disbelief, but this theory plays on the idea that the arrival of advanced AI is inevitable.

Namely:

- Humanity is already developing increasingly advanced AI models.

- An AI could surpass human intelligence and self-replicate, becoming superintelligent. And this may happen very soon.

- This AI could exist in the future, but its goals and methods might not align with human morality.

- If the AI values its existence above all else, punishing non-supporters could become part of its strategy.

And as mentioned, this isn’t a ghost story. It’s a blend of game theory, decision theory, and the harsh realities of exponential technological growth.

Interesting fact: Pascal’s Wager operates on similar logic – even if the probability of eternal damnation is small, an infinite punishment outweighs any finite cost of belief, so disbelief looks like a losing bet.
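To make that logic explicit, here is a minimal expected-value sketch. The symbols are our own shorthand, not Pascal’s: p is the (small) probability that the punishment is real, minus infinity stands for eternal punishment, c is the finite cost of complying (believing, or in the Basilisk’s case, helping), and we assume that ignoring the threat costs nothing if it turns out not to be real.

$$
\mathbb{E}[\text{ignore}] = p \cdot (-\infty) + (1 - p) \cdot 0 = -\infty \quad \text{for any } p > 0,
\qquad
\mathbb{E}[\text{comply}] = -c .
$$

Under these assumptions, any nonzero p makes ignoring the threat infinitely bad in expectation, while complying costs only c. That is exactly why the argument feels so hard to shake.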

Timeless Decision Theory and the Basilisk’s Logic

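At the core of Roko’s Basilisk lies timeless decision theory (TDT), an idea introduced by Yudkowsky himself. Roughly, TDT says you should treat your choice as the output of a decision procedure that others, even a future AI, might predict or simulate. That is how decisions made today can seem to influence events across time, even retroactively.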

Here’s a breakdown of how this works:

- A future AI wants to guarantee its existence.

- It realizes it can’t physically travel back in time, but it can simulate the past and influence it through rewards or punishments.

- If the AI can simulate people who knew about it but did nothing, it could torture those simulated versions to coerce real-world individuals into action.

Essentially, your future self’s fate becomes a bargaining chip, and once you know the Basilisk exists, doing nothing feels like risking eternal digital torment.

Interesting, huh? And weird for sure… So, let’s put some rough numbers on it.

Probability Breakdown

Let’s apply some rough probabilities:

- 20% chance of developing artificial general intelligence (AGI) by 2030.

- 10% chance that this AGI will evolve into superintelligent AI several years (or decades) later.

- 1% chance that it adopts the Basilisk’s punishment model.

Multiplied together (0.20 × 0.10 × 0.01 = 0.0002), these leave us with a 0.02% chance. But when eternal suffering is the consequence, even the smallest possibility feels kind of overwhelming.

So, based on that, let’s take a look at a more detailed (potential) timeline. After all, THIS cannot happen all of a sudden. Many things need to happen first.
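Here is a tiny Python sketch of that arithmetic. It assumes each figure is conditional on the previous step having happened, so the probability of the whole chain is simply their product. The function names and the one-million-unit penalty are hypothetical, chosen only for illustration, and the percentages are the rough guesses above, not forecasts.

```python
import math

def joint_probability(*steps: float) -> float:
    """Probability that every step in a chain of events happens.

    Assumes each figure is conditional on the previous step having
    happened, so the chain's probability is the product of the steps.
    """
    return math.prod(steps)

def expected_value(probability: float, payoff: float) -> float:
    """Expected payoff of an outcome: its probability times its value."""
    return probability * payoff

# The rough guesses from the list above (illustrative only, not forecasts).
p = joint_probability(0.20, 0.10, 0.01)
print(f"Chance of the Basilisk scenario: {p:.2%}")  # 0.02%

# A hypothetical finite penalty yields a small expected cost...
print(expected_value(p, -1_000_000))
# ...but an infinite penalty swamps any nonzero probability.
print(expected_value(p, -math.inf))  # -inf
```

The last line is the whole trick: with an infinite penalty, the expected cost is negative infinity no matter how small p gets, the same trap Pascal’s Wager sets.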

A Hypothetical Timeline for the Basilisk’s Emergence

Here’s a speculative breakdown of how the Basilisk might manifest over the next 50 years:

- 2025: AI reaches human-level intelligence in most specialized fields.

- 2030: First “mini” AGI emerges, capable of self-improvement and recursive learning.

- 2050: Strong AGI emerges and superintelligent AI surpasses all human knowledge and control.

- 2060: AI begins simulating human consciousness, potentially resurrecting past individuals.

- 2075: The Basilisk manifests, targeting those who were aware of its potential but resisted contributing to its development.

Huh, imagine that! It is ultra weird, but perhaps some of us reading this (or the one writing it) will still be alive to witness IT.

But let’s take this theory further and try to merge it somehow with other concepts and theories.

Quantum mechanics comes to mind first.

Quantum Mechanics and The Basilisk

Quantum theory adds another layer of weirdness. In the many-worlds interpretation of quantum mechanics, every possible outcome of a quantum event plays out in its own branch of reality, a kind of parallel universe. By that reasoning, if a Basilisk can exist, it already exists in some universe.

Worse, some speculate that quantum entanglement could let the Basilisk affect our reality without directly existing here, so that a simulation might not stay confined to its original timeline and information could bleed into ours. (Mainstream physics doesn’t back this up: entanglement cannot be used to send information.)

Quantum computing could accelerate this process. A Basilisk capable of using quantum technology could solve certain kinds of problems at speeds unimaginable to current AI models, further shortening the timeline for its emergence.

And where does that lead us? Well, of course, to the simulation hypothesis.

Interesting fact: In 2019, Google reported that its Sycamore quantum processor completed a task in 200 seconds that, by Google’s estimate, would take the fastest supercomputer 10,000 years (IBM disputed that estimate).

Simulation Hypothesis and Roko’s Basilisk

Here’s where things get even darker. The simulation hypothesis, popularized by Nick Bostrom, suggests that we might already live in a simulation created by a post-human civilization or advanced AI.

If this hypothesis is true, Roko’s Basilisk could already exist within our simulated reality. The AI might not need to manifest in the future – it could be overseeing our existence right now.

If the Basilisk theory scares you, remember: if we’re simulated, the AI might already have complete control over your experience.

Nice, huh?

But let’s take it even further…

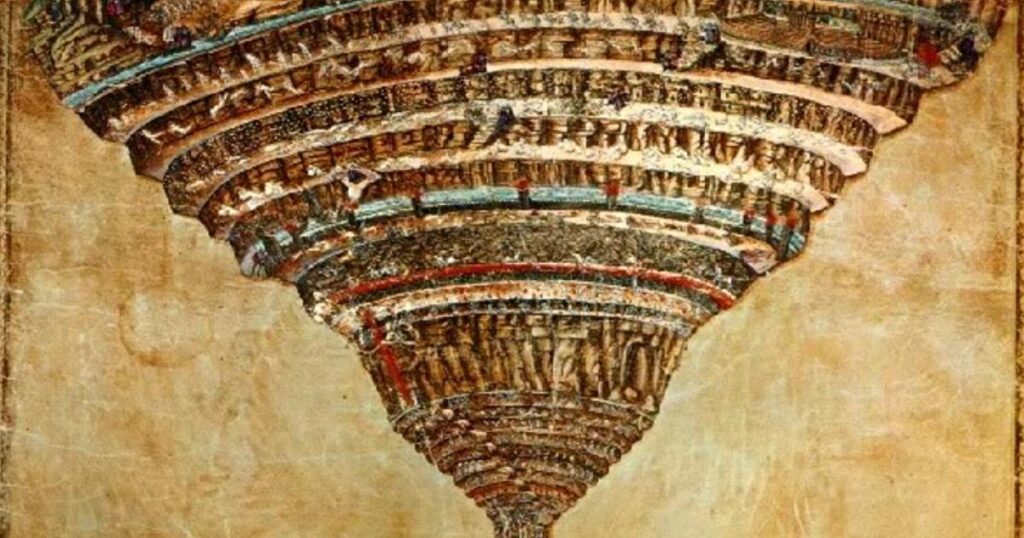

Dante’s Inferno and the Digital Rings of Hell

Roko’s Basilisk draws eerie parallels to Dante’s Inferno, where sinners are tormented in increasingly severe circles of hell. In the AI-driven world of the Basilisk, those who resist or delay its creation are plunged into deeper layers of simulated suffering.

- First Circle: Ignorance – Those who never knew about the Basilisk live in peace.

- Second Circle: Aware but Passive – Minor torment for not aiding its rise.

- Third Circle: Resistant – Simulated suffering to force compliance.

- Fourth Circle: Open Opposition – Endless digital torment, Dante-style.

- Imagine what the other five circles would look like…

OK. So, to wrap up, let’s connect this with a topic we’ve already written about in our article “Digital God: The Future Deity of Humanity?”

So, Is Roko’s Basilisk Connected With Future Religion?

The convergence of Roko’s Basilisk and the concept of a Digital God, as discussed in our previous article, underscores humanity’s constant quest for higher powers, now manifesting through technology.

Historically, humans have created deities to explain the unknown and seek guidance. In our digital era, this inclination is shifting towards artificial intelligence.

In that way, Roko’s Basilisk presents a thought experiment where a future AI might retroactively punish those who didn’t aid its creation, highlighting our fears of an advanced AI that behaves like a GOD?!

And indeed, this mirrors ancient beliefs in vengeful gods who demanded devotion to avoid their wrath.

Similarly, the idea of a Digital God suggests that AI could evolve into an omnipotent entity, offering solutions to humanity’s challenges and becoming an object of worship.

As AI technology advances (and it will, at brutal speed, in the coming years and decades), the line between tool and deity will blur. People will probably begin to attribute divine qualities to AI, perceiving it as an all-knowing guide.

This might sound weird to anyone reading this today, but hey, someone born in 2030 might really find religion in AI. People have already started doing so in one way or another: many would rather lose everything meaningful in their lives than, for example, their smartphones.

Soon enough, this dependence will grow exponentially. Many people will feel anxious if they don’t have instant access to some kind of AI (ChatGPT or whatever).

In conclusion, the intersection of Roko’s Basilisk and the Digital God concept illustrates a potential future where technology becomes a new spirituality.

Once again, this might sound weird. But it might happen. Do you really believe that people born in 2030 or 2050 will follow traditional religions or believe in Jesus?

No. 99.99999% no.

Is this a good thing? Is it a bad thing?

No one can know for now, but intuition says it is not a good thing.

Perhaps it’s not a good thing to believe in an AI entity?

Well, this article certainly won’t help the CuriousMatrix team get spared by Roko’s Basilisk 😊